ES5_3IngestNode

Ingest Node

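Ingest Node provides Logstash-like functionality:

- Preprocessing: it can intercept requests sent to the Index or Bulk API
- Transformation: it transforms the data and hands the result back to the Index or Bulk API

Typical examples are setting a default value for a field, renaming a field, or splitting a field's value.

Painless scripts can also be used for more complex processing.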
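To make those transformations concrete, here is a local Python model of them as plain dictionary operations. It is not Elasticsearch code. In Elasticsearch these jobs are done by processors such as set, rename and split. The function names and the sample document below are hypothetical.

```python
from typing import Any, Dict

Doc = Dict[str, Any]


def set_default(doc: Doc, field: str, value: Any) -> Doc:
    """Give `field` a default value if the document does not have it yet."""
    out = dict(doc)
    out.setdefault(field, value)
    return out


def rename_field(doc: Doc, old: str, new: str) -> Doc:
    """Move the value stored under `old` to the key `new`."""
    out = dict(doc)
    out[new] = out.pop(old)
    return out


def split_field(doc: Doc, field: str, separator: str) -> Doc:
    """Split a string field into a list of strings."""
    out = dict(doc)
    out[field] = out[field].split(separator)
    return out


if __name__ == "__main__":
    # Hypothetical input document.
    doc = {"name": "Ingest Node intro", "tags": "es,ingest"}
    doc = set_default(doc, "views", 0)
    doc = rename_field(doc, "name", "title")
    doc = split_field(doc, "tags", ",")
    print(doc)
```

Each function returns a new dict instead of mutating its input, so the steps can be chained freely.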

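Compared with Logstash:

| Aspect | Logstash | Ingest Node |
|---|---|---|
| Data input and output | Reads from and writes to many different data sources | Receives data through the ES REST API and writes it only to ES |
| Data buffering | Implements a simple data queue and supports rewrites | No buffering |
| Data processing | Large number of plugins; custom development supported | Built-in processors; custom plugins can be developed (but adding a plugin requires a restart) |
| Configuration and use | Adds some architectural complexity | No additional deployment needed |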

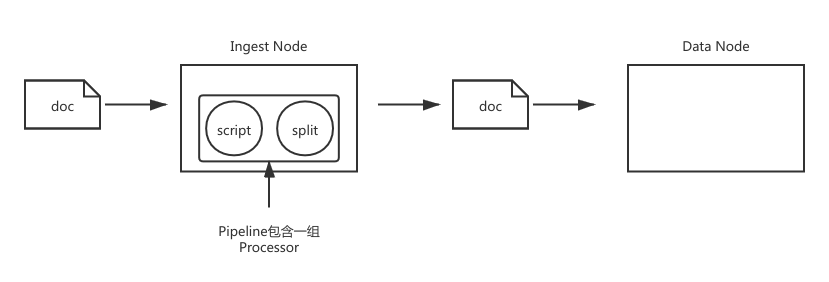

Building an Ingest Node: Pipeline & Processor

- Pipeline: processes the data (documents) passing through it, in order
- Processor: an abstraction that encapsulates one processing action
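Conceptually, a pipeline is an ordered list of processors, and each processor is a function from document to document. The Python sketch below is a model only. `run_pipeline`, `split_tags` and `count_tags` are hypothetical names. Running the same two processors in the opposite order produces a different document, which is why processor order in a pipeline matters.

```python
from typing import Any, Callable, Dict, List

Doc = Dict[str, Any]
Processor = Callable[[Doc], Doc]


def run_pipeline(doc: Doc, processors: List[Processor]) -> Doc:
    """Pass a document through each processor in order, like a pipeline."""
    for processor in processors:
        doc = processor(doc)
    return doc


def split_tags(doc: Doc) -> Doc:
    # Turn "a,b" into ["a", "b"].
    return {**doc, "tags": doc["tags"].split(",")}


def count_tags(doc: Doc) -> Doc:
    # Counts list items only if split_tags has already run;
    # on the raw string it counts characters instead.
    return {**doc, "tag_count": len(doc["tags"])}


if __name__ == "__main__":
    doc = {"title": "hello", "tags": "es,ingest,pipeline"}
    print(run_pipeline(doc, [split_tags, count_tags]))
    print(run_pipeline(doc, [count_tags, split_tags]))
```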

Creating a pipeline

Add a pipeline to ES:

```
PUT _ingest/pipeline/blog_pipeline
```
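The request body for this PUT is not shown. The test results described below (tags becomes an array, and a views field appears) suggest that the pipeline holds a split processor on tags and a set processor for views. The sketch below assumes exactly that. It also assumes a separator of "," and an initial views value of 0, and the description string is hypothetical. It builds the corresponding JSON body as a Python dict and applies it with a tiny local interpreter that understands only these two processor types.

```python
import json
from typing import Any, Dict

# Assumed body for PUT _ingest/pipeline/blog_pipeline (hypothetical values).
BLOG_PIPELINE: Dict[str, Any] = {
    "description": "a blog pipeline",
    "processors": [
        {"split": {"field": "tags", "separator": ","}},
        {"set": {"field": "views", "value": 0}},
    ],
}


def apply_pipeline(pipeline: Dict[str, Any], source: Dict[str, Any]) -> Dict[str, Any]:
    """Local model: apply split/set processors to a copy of `source`, in order."""
    doc = dict(source)
    for processor in pipeline["processors"]:
        # Each processor entry is a one-key dict: {"<type>": {options}}.
        kind, opts = next(iter(processor.items()))
        if kind == "split":
            # Elasticsearch treats the separator as a regex; "," needs no
            # escaping, so a plain str.split gives the same result here.
            doc[opts["field"]] = doc[opts["field"]].split(opts["separator"])
        elif kind == "set":
            doc[opts["field"]] = opts["value"]
        else:
            raise ValueError(f"processor not modelled: {kind}")
    return doc


if __name__ == "__main__":
    # JSON that could be used as the request body in the Kibana console.
    print(json.dumps(BLOG_PIPELINE, indent=2))
```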

View the pipeline:

```
GET _ingest/pipeline/blog_pipeline
```

Test the pipeline:

```
POST _ingest/pipeline/blog_pipeline/_simulate
```

- The tags field has been split into an array
- A new views field has been added to the resulting document
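The _simulate call takes a list of docs, each wrapped in `_source`, and returns each processed document under `doc` without indexing anything. The Python below models only that request/response shape. It assumes blog_pipeline splits tags on "," and sets views to 0, and the sample document is hypothetical. The real response carries additional metadata, such as `_index`, `_id` and ingest information, which the model leaves out.

```python
from typing import Any, Dict, List


def blog_pipeline(source: Dict[str, Any]) -> Dict[str, Any]:
    """Assumed behaviour of blog_pipeline: split tags on ',' and add views=0."""
    doc = dict(source)
    doc["tags"] = doc["tags"].split(",")
    doc["views"] = 0
    return doc


def simulate(request_body: Dict[str, Any]) -> Dict[str, List[Dict[str, Any]]]:
    """Model of the _simulate response: one {"doc": {"_source": ...}} per input doc."""
    return {
        "docs": [
            {"doc": {"_source": blog_pipeline(entry["_source"])}}
            for entry in request_body["docs"]
        ]
    }


if __name__ == "__main__":
    body = {"docs": [{"_source": {"title": "hypothetical post", "tags": "openresty,nginx"}}]}
    print(simulate(body))
```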

Write (index) a document through the pipeline:

```
PUT tech_blogs/_doc/2?pipeline=blog_pipeline
```
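With `?pipeline=blog_pipeline` on the index request, the stored document is the pipeline's output rather than the raw request body. A minimal in-memory model of that behavior is shown below. The `InMemoryIndex` class is hypothetical, and blog_pipeline is again assumed to split tags on "," and set views to 0.

```python
from typing import Any, Callable, Dict, Optional

Doc = Dict[str, Any]


def blog_pipeline(source: Doc) -> Doc:
    # Assumed behaviour: split tags on ',' and add views=0.
    return {**source, "tags": source["tags"].split(","), "views": 0}


class InMemoryIndex:
    """Toy stand-in for an index such as tech_blogs."""

    def __init__(self) -> None:
        self.docs: Dict[str, Doc] = {}

    def put(self, doc_id: str, source: Doc,
            pipeline: Optional[Callable[[Doc], Doc]] = None) -> None:
        # Like PUT tech_blogs/_doc/<id>?pipeline=...: run the pipeline first,
        # then store (or overwrite) the result under doc_id.
        self.docs[doc_id] = pipeline(source) if pipeline else dict(source)


if __name__ == "__main__":
    tech_blogs = InMemoryIndex()
    tech_blogs.put("1", {"title": "raw", "tags": "openresty,nginx"})
    tech_blogs.put("2", {"title": "processed", "tags": "openresty,nginx"},
                   pipeline=blog_pipeline)
    print(tech_blogs.docs)
```

After these two calls the index holds one document with tags as a string and one with tags as an array. That mix matters for the `_update_by_query` case that follows.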

However, running _update_by_query with the same pipeline may fail with an error:

```
POST /tech_blogs/_update_by_query?pipeline=blog_pipeline
```

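The cause is that documents that already went through the pipeline store tags as an array. Running the split processor over them again means applying a string split to an array-typed field.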
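A small Python model shows why the second pass fails. The split step only makes sense on a string, so a document whose tags is already a list is rejected, as described above. This is not Elasticsearch code: `split_processor` is a hypothetical name, and its error text is invented for the model.

```python
from typing import Any, Dict


def split_processor(doc: Dict[str, Any], field: str, separator: str = ",") -> Dict[str, Any]:
    """Model of split: accepts only a string value."""
    value = doc[field]
    if not isinstance(value, str):
        # Model-specific message; Elasticsearch reports its own error text.
        raise TypeError(f"field [{field}] is {type(value).__name__}, not a string")
    return {**doc, field: value.split(separator)}


if __name__ == "__main__":
    fresh = {"tags": "openresty,nginx"}         # never processed
    processed = split_processor(fresh, "tags")  # tags is now a list
    try:
        split_processor(processed, "tags")      # second pass, as in _update_by_query
    except TypeError as err:
        print("second split failed:", err)
```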

To avoid this, add a query condition so that documents that have already been processed are skipped:

```
POST tech_blogs/_update_by_query?pipeline=blog_pipeline
```
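The condition itself is not shown. One plausible choice, assumed here, is a `bool` query with `must_not` plus `exists` on views, because only the pipeline adds that field. The Python model below mirrors that assumption. It re-runs the pipeline only on documents without views, so a second pass updates nothing. `update_by_query`, `matches` and the sample index are hypothetical, and `matches` evaluates only this one query shape.

```python
from typing import Any, Dict

Doc = Dict[str, Any]

# Assumed request body for the _update_by_query call:
# skip every document that already has a "views" field.
UPDATE_QUERY = {"query": {"bool": {"must_not": {"exists": {"field": "views"}}}}}


def blog_pipeline(source: Doc) -> Doc:
    # Assumed behaviour: split tags on ',' and add views=0.
    return {**source, "tags": source["tags"].split(","), "views": 0}


def matches(doc: Doc, body: Dict[str, Any]) -> bool:
    """Evaluate only the must_not/exists shape used in UPDATE_QUERY."""
    field = body["query"]["bool"]["must_not"]["exists"]["field"]
    return field not in doc


def update_by_query(index: Dict[str, Doc], body: Dict[str, Any]) -> int:
    """Re-run blog_pipeline on matching docs; return how many were updated."""
    updated = 0
    for doc_id, doc in index.items():
        if matches(doc, body):
            index[doc_id] = blog_pipeline(doc)
            updated += 1
    return updated


if __name__ == "__main__":
    tech_blogs = {
        "1": {"tags": "openresty,nginx"},                   # not yet processed
        "2": {"tags": ["openresty", "nginx"], "views": 0},  # already processed
    }
    print(update_by_query(tech_blogs, UPDATE_QUERY))  # first pass → 1
    print(update_by_query(tech_blogs, UPDATE_QUERY))  # second pass → 0
```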

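Building a pipeline

There are many kinds of processors; only some of them are listed here.

Splitting a field: split

The _simulate API can also test a pipeline that is defined inline in the request body, without creating it first: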

```
POST _ingest/pipeline/_simulate
```

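- The pipeline has a single processor, which splits the document's tags field on "," into an array
- The document has a tags field, but in the original value several tags are joined into one string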
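In this form of _simulate the request body carries the pipeline definition under `pipeline`, next to `docs`. The Python below builds such a body with a single split processor and models the result locally. The description and the sample document are hypothetical. Elasticsearch treats `separator` as a regular expression, so the model uses `re.split` to match that.

```python
import re
from typing import Any, Dict

# Body for POST _ingest/pipeline/_simulate: an inline pipeline plus test docs.
SIMULATE_BODY: Dict[str, Any] = {
    "pipeline": {
        "description": "split tags",  # hypothetical description
        "processors": [{"split": {"field": "tags", "separator": ","}}],
    },
    "docs": [
        {"_source": {"title": "hypothetical post", "tags": "hadoop,elasticsearch,spark"}}
    ],
}


def simulate_inline(body: Dict[str, Any]) -> Dict[str, Any]:
    """Model: apply the split processors in body["pipeline"] to each doc."""
    results = []
    for entry in body["docs"]:
        doc = dict(entry["_source"])
        for processor in body["pipeline"]["processors"]:
            opts = processor["split"]
            # The separator is a regex in Elasticsearch, hence re.split.
            doc[opts["field"]] = re.split(opts["separator"], doc[opts["field"]])
        results.append({"doc": {"_source": doc}})
    return {"docs": results}


if __name__ == "__main__":
    print(simulate_inline(SIMULATE_BODY))
```

Because the separator is a regex, a value such as `\s*,\s*` would also strip the spaces around each comma.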

Setting a field value: set

```
POST _ingest/pipeline/_simulate
```

- When the document is added, the set processor adds a new field, views
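Below is a local Python model of the set processor. The initial views value of 0 and the sample document are hypothetical. The `override` flag mirrors the set processor's option of the same name (default true): when it is false, a field that already holds a non-null value is left untouched.

```python
from typing import Any, Dict

Doc = Dict[str, Any]


def set_processor(doc: Doc, field: str, value: Any, override: bool = True) -> Doc:
    """Model of set: write `value` to `field`.

    With override=False an existing non-null value is kept.
    """
    out = dict(doc)
    if override or out.get(field) is None:
        out[field] = value
    return out


if __name__ == "__main__":
    new_doc = {"title": "hypothetical post", "tags": ["hadoop", "spark"]}
    print(set_processor(new_doc, "views", 0))
    # An existing counter survives when override is disabled.
    print(set_processor({"views": 42}, "views", 0, override=False))
```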